Fault Tolerant Smart Contracts with Circuit Breakers

Saturday, 25 July 2020 · 60 min read · ethereum solidity

Software systems are becoming more and more interconnected. Service-oriented architecture transforms software into smaller, independently deployable units that communicate over the network. APIs are eating the world, rapidly becoming the primary interface for business. Smart contracts go a step further by creating public, immutable protocols that anyone can run without permission.

Software is being broken down into, and offered as, modular services. It’s innovation Legos on steroids: by composing multiple building blocks, we can bootstrap new ventures much more rapidly and at lower cost.

However, this new world of distributed systems introduces its own challenges. What happens if a service on a critical path fails? Can the system recover from faults? Or will a single failure cascade to downstream services in a catastrophic explosion of fire and death?

In the world of smart contracts, faults can be an extraordinarily expensive affair. With DeFi continuing to grow, can we do better?

In an ultra-interconnected system, fault tolerance is key. The COVID-19 pandemic showed everyone the importance of resiliency in the real world. A fault-tolerant design enables a system to continue its intended operation, possibly at a reduced level, rather than failing completely, when some part of the system fails.

In this article, we’ll explore:

- The dangers of cascading failure in service oriented architectures,

- Fault tolerance and resiliency patterns,

- How circuit breakers can improve fault tolerance in smart contracts, and

- An implementation of a Circuit Breaker in Solidity.

The circuit-breakers Solidity code is open source and available on GitHub. Read on to learn why we need circuit breakers and how they work.


The Dangers of Cascading Failure

In a service-oriented architecture, multiple services collaborate in handling requests. When one service calls another, there’s always the risk that the other service is temporarily unavailable or faulty.

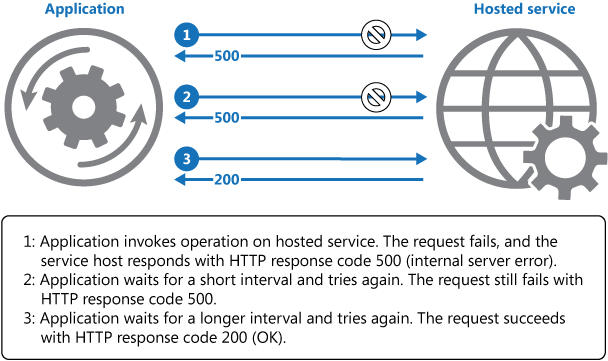

Calls to services can fail due to transient faults, such as slow network connections, timeouts, or the resources being overcommitted or temporarily unavailable. Some faults correct themselves after a short period of time, and a robust system should be prepared to handle them by using a strategy such as retrying.

However, there can be situations where faults are due to the complete failure of a service, which takes much longer to fix. The failure of one service can potentially cascade to the rest of the system. In these situations, it is pointless for an application to keep retrying an operation that is unlikely to succeed; instead, the application should quickly accept that the operation has failed and handle the failure accordingly.

Achieving Fault Tolerance

A fault-tolerant design enables a system to continue its intended operation, possibly at a reduced level, rather than failing completely, when some part of the system fails.

In our pursuit of fault tolerance, we want:

- No single point of failure: if a system experiences a failure, it must continue to operate without interruption during the repair process.

- Fault isolation to the failing component: when a failure occurs, the system must be able to isolate the failure to the offending component. This requires failure detection mechanisms that exist only for the purpose of fault isolation.

- Fault containment to prevent cascading failures: some failure mechanisms can cause a system to fail by propagating the failure to the rest of the system.

A system that achieves all three properties can be considered fault tolerant.

Resilience Patterns in Distributed Systems

Let’s look at three design patterns that increase fault tolerance in distributed systems: Circuit Breakers, Graceful Degradation, and Retries. While these patterns are more commonly used in traditional service-oriented architectures, we’ll see whether we can apply the same principles to smart contracts.

Resiliency Pattern 1: Circuit Breakers

The Circuit Breaker pattern prevents an application from repeatedly performing an operation that is likely to fail.

Household circuit breakers are designed to protect an electrical circuit from damage caused by excess current from an overload or short circuit.

A breaker’s basic function is to interrupt current flow once a fault is detected, preventing fires. The same concept can be applied to distributed systems.

The Circuit Breaker pattern also enables a system to detect whether the fault has been resolved. If the problem appears to have been fixed, the system can try to invoke the operation again.

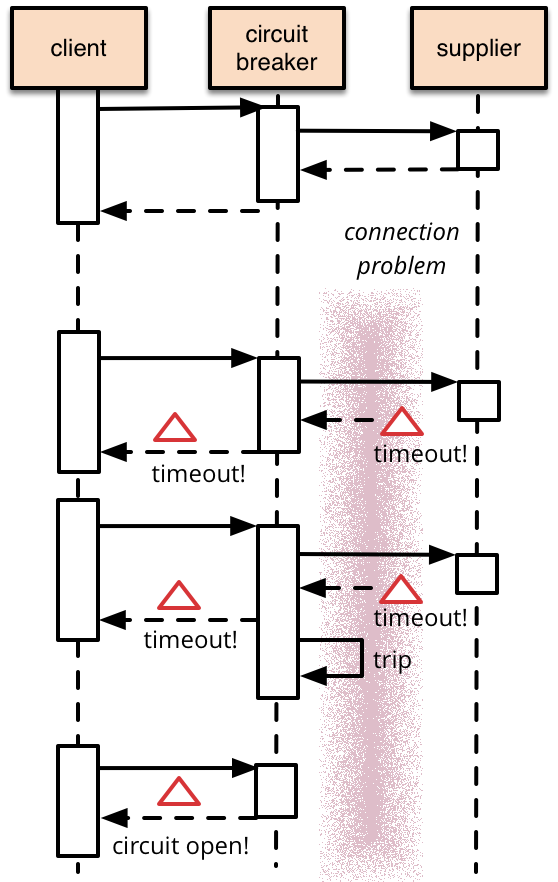

In the Circuit Breaker pattern, you wrap a protected function call in a circuit breaker object, which monitors failures. When the number of consecutive failures crosses a threshold, the circuit breaker trips, and all further calls to the circuit breaker return with an error, without the protected call being made at all.

After the timeout expires, the circuit breaker allows a limited number of test requests to pass through. If those requests succeed, the circuit breaker resumes normal operation. Otherwise, if there is a failure, the timeout period begins again.

A circuit breaker acts as a proxy for operations that might fail. The proxy monitors the number of recent failures and uses this information to decide whether to allow the operation to proceed or to return immediately.

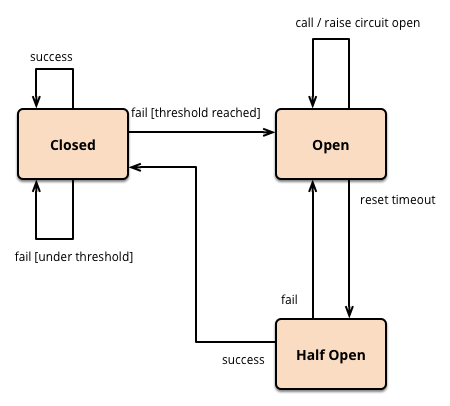

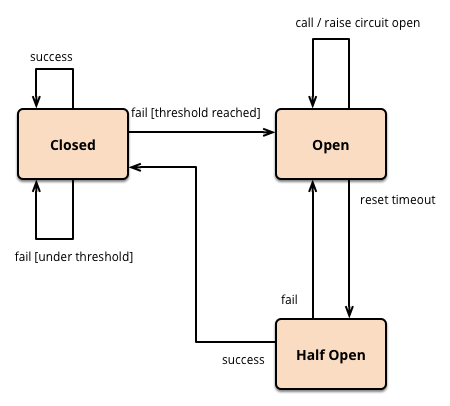

The Circuit Breaker can be implemented as a state machine with the following states that mimic the functionality of an electrical circuit breaker:

- Closed: Incoming requests are routed to the service as normal. The circuit breaker maintains a count of recent failures, and if a call to the service is unsuccessful, the circuit breaker increments this count. If the number of recent failures exceeds a specified threshold within a given time period, the circuit breaker is placed into the Open state.

- Open: Incoming requests return immediately with an error. No calls are made to the failing service. At this point the circuit breaker starts a cooldown timer, and when the cooldown expires, the circuit breaker is placed into the Half-Open state.

- Half-Open: A limited number of requests are allowed to pass through and call the service. If these requests are successful, it’s assumed that the fault has been fixed, and the circuit breaker switches back to the Closed state (the failure counter and cooldown are reset). If any request fails, the circuit breaker assumes that the fault is still present, so it reverts to the Open state and restarts the cooldown timer to give the system more time to recover.

If the circuit breaker raises an event each time it changes state, this information can be used to monitor the health of the part of the system protected by the circuit breaker.

To summarize, circuit breakers provide stability while the system recovers from a failure and minimize the impact on the rest of the system. They help keep the system running by quickly rejecting a request for an operation that’s likely to fail, rather than waiting for the operation to time out or never return.

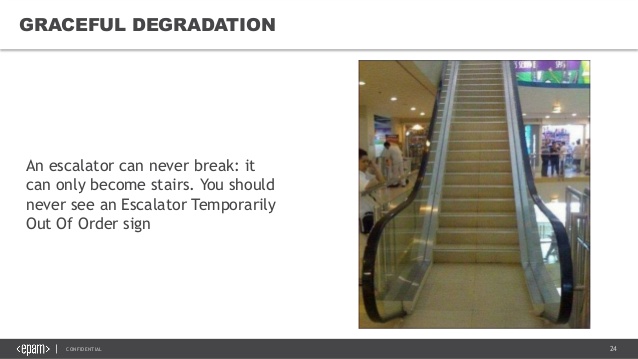

Resiliency Pattern 2: Graceful Degradation

A system that is designed for graceful degradation operates at a reduced level of performance if a critical component fails. For example, a broken escalator can still be used as stairs. This concept is closely related to redundancy.

In practice, graceful degradation can consist of backup components which automatically “kick in” if the primary component fails. For example, hospitals keep backup generators that provide emergency power during natural disasters. Fallback components perform limited functionality while the rest of the system slowly returns to normal, keeping things running instead of failing completely.

Resiliency Pattern 3: Retries

The Retry pattern automatically retries an operation that has previously failed due to an exception. This is effective for transient or self-correcting issues.

The purpose of the Circuit Breaker pattern is different from that of the Retry pattern. The Retry pattern enables an application to retry an operation in the expectation that it’ll succeed. The Circuit Breaker pattern prevents an application from performing an operation that is likely to fail.

We can combine these two patterns by using the Retry pattern to invoke an operation through a circuit breaker. However, the retry logic should be sensitive to any exceptions returned by the circuit breaker and abandon retry attempts if the circuit breaker indicates that a fault is not transient.

The period between retries should be chosen to spread requests as evenly as possible. This reduces the chance of a busy service continuing to be overloaded. If we continually overwhelm a service with retry requests, it’ll take the service longer to recover.

If the request still fails, the system can wait and make another attempt. If necessary, this process can be repeated with increasing delays between retry attempts, until some maximum number of requests have been attempted. The delay can be increased incrementally or exponentially, depending on the type of failure and the probability that it’ll be corrected during this time.
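The two delay schedules described above, incremental and exponential, can be sketched as pure Solidity helpers. This is a minimal illustration under our own assumptions, not part of the circuit-breakers code: the contract RetryBackoff, its functions, and the parameters base, attempt (0-based), and maxDelay are all hypothetical. One caveat specific to smart contracts: immediately retrying a reverted call within the same transaction usually fails the same way, because the state it reads has not changed, so delays like these fit better as spacing between attempts made in separate transactions, for example as a breaker cooldown.

```solidity
pragma solidity >=0.6.12 <0.9.0;

// Hypothetical helper, not part of the circuit-breakers repo.
// `attempt` is 0-based: attempt 0 is the first retry.
contract RetryBackoff {
    // Incremental (linear) backoff: base, 2*base, 3*base, ... capped at maxDelay.
    function incrementalDelay(uint256 base, uint8 attempt, uint256 maxDelay)
        public pure returns (uint256)
    {
        uint256 steps = uint256(attempt) + 1;
        // Saturate at maxDelay instead of overflowing (0.6.x does not check overflow).
        if (base > maxDelay / steps) return maxDelay;
        return base * steps;
    }

    // Exponential backoff: base, 2*base, 4*base, ... capped at maxDelay.
    function exponentialDelay(uint256 base, uint8 attempt, uint256 maxDelay)
        public pure returns (uint256)
    {
        uint256 factor = uint256(1) << attempt; // 2**attempt, attempt <= 255
        if (base > maxDelay / factor) return maxDelay;
        return base * factor;
    }
}
```

Capping at maxDelay keeps the exponential schedule from growing without bound, which matters because a cooldown that grows too long can leave a recovered dependency unused for hours.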

Note that Retries are not helpful when a fault is likely to be long lasting or for handling failures that aren’t due to transient faults, such as internal exceptions caused by errors in the business logic of an application. If a request still fails after a significant number of retries, it’s better to prevent further requests and report a failure immediately using the Circuit Breaker pattern.

DeFi: Avoiding Cascading Failure in Smart Contracts

Let’s loop back to the world of smart contracts. We’ve been talking about failures in traditional service-oriented architectures, but smart contracts are just as vulnerable to cascading failures, if not more so!

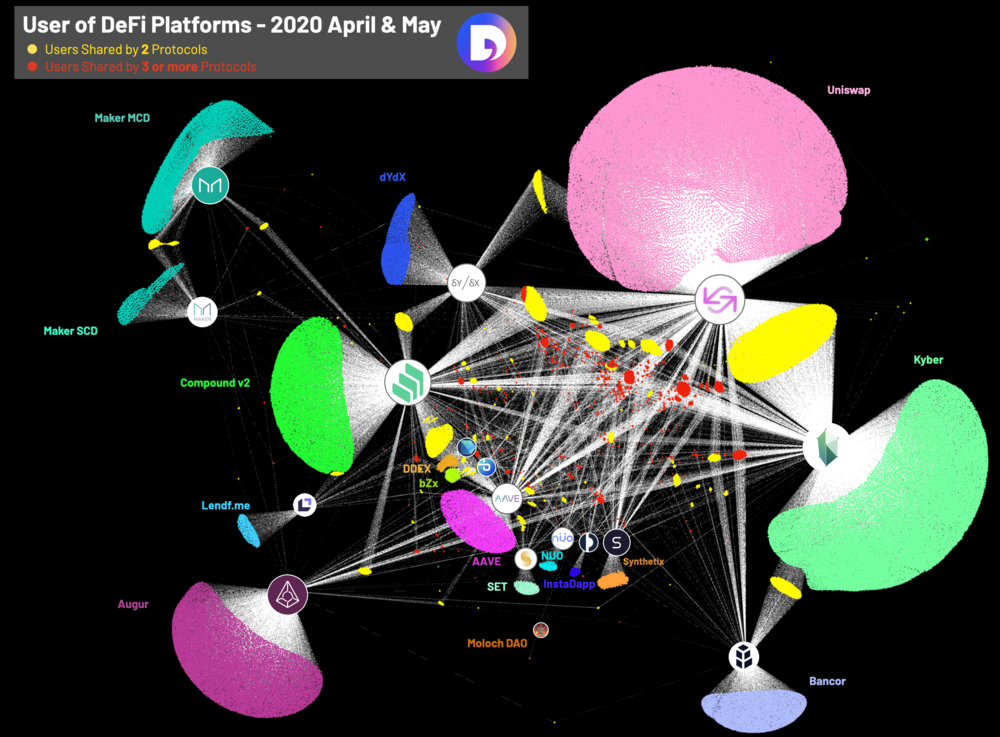

With DeFi’s total value locked (TVL) currently close to US$4B and growing, you can’t help but be cautious. DeFi projects are building an interlocking financial system on top of smart contracts. This poses a potentially systemic risk arising from the interdependencies between DeFi protocols. For example, much of DeFi relies on MakerDAO’s stablecoin protocol and on money market protocols such as Compound as building blocks.

Similar to microservices, a handful of smart contracts are on the critical path of other DeFi protocols. Bugs in any of these critical contracts could break downstream protocols, leading to painful upgrade paths or locked funds in the worst case!

We want to avoid and mitigate the above scenario.

Fault Tolerant Contract Test Case

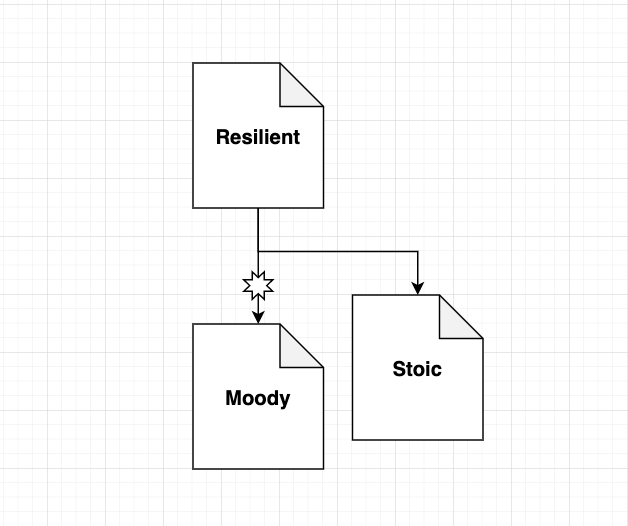

Let’s create a simple fault-tolerant test case. Imagine a smart contract architecture with three contracts: Moody, Stoic, and Resilient.

Both Moody and Stoic are IHuman contracts that implement speak():

// interfaces/IHuman.sol
pragma solidity ^0.6.12;
interface IHuman {
    function speak() external view returns (string memory);
}

The Resilient contract calls Moody. In other words, Moody is on Resilient’s critical path. If the call to Moody.speak() ever reverts, Resilient.ask() will fail and stop working as expected:

// Resilient.sol
pragma solidity ^0.6.12;
import "./interfaces/IHuman.sol";
contract Resilient {
    IHuman public primary;
    IHuman public secondary;
    constructor(
        IHuman _primary,
        IHuman _secondary
    ) public {
        primary = _primary;
        secondary = _secondary;
    }
    function ask() external view returns (string memory) {
        return primary.speak(); // Calls Moody, could fail and revert
    }
}

In addition, Moody (as its name suggests) can enter a ‘moody’ state. In this state, every call to Moody.speak() reverts:

// Moody.sol
pragma solidity ^0.6.12;
import "./interfaces/IHuman.sol";
contract Moody is IHuman {
    bool public isMoody = false;
    string public greeting;
    constructor(
        string memory _greeting
    ) public {
        greeting = _greeting;
    }
    function toggleMood() external {
        isMoody = !isMoody;
    }
    function speak() external override view returns (string memory) {
        require(!isMoody, "REeEeEeEeEeEeEeEeEeEeEeEe"); // Always reverts if isMoody
        return greeting;
    }
}

Whenever the isMoody flag is true, Moody.speak() reverts. In contrast, Stoic always responds successfully, so perhaps we can use Stoic as a passive backup for Resilient:

// Stoic.sol
pragma solidity ^0.6.12;
import "./interfaces/IHuman.sol";
contract Stoic is IHuman {
    string public greeting;
    constructor(
        string memory _greeting
    ) public {
        greeting = _greeting;
    }
    function speak() external override view returns (string memory) {
        return greeting;
    }
}

For the rest of this article, we’ll use the above contracts as a test case. Our goal is to make Resilient tolerant of any failures in Moody by using circuit breakers.

Implementing Circuit Breakers in Smart Contracts

Now, let’s bring together the resiliency patterns we’ve learned and translate them into smart contracts. We’ll use the Circuit Breaker pattern to add fault tolerance to our smart contract system.

Handling Errors with Try Catch in Solidity

The try/catch syntax introduced in Solidity 0.6.0 is arguably the biggest leap in error handling capabilities in smart contracts, since reason strings for revert and require were released in v0.4.22. With it, we can add graceful degradation in our code like so:

function ask() external view returns (string memory) {
    try primary.speak() returns (string memory greeting) {
        return greeting;
    } catch {
        return secondary.speak();
    }
}

In the above code:

- We first try to call primary.speak() and return the result if it succeeds.
- Otherwise, if primary fails, we return the result of secondary.speak() instead.

If execution reaches a catch block, the state changes made by the external call have been reverted. If execution reaches the success block, they were not. Only the callee’s changes are rolled back: state changes made by the calling function itself are kept.
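A small pair of contracts makes the rollback rule concrete. Counter and Caller are hypothetical names used only for this sketch: the callee writes to storage and then reverts, and the caller catches the failure. The callee’s write is undone, while the caller’s own write inside the catch block persists.

```solidity
pragma solidity >=0.6.12 <0.9.0;

// Hypothetical callee used to illustrate try/catch rollback semantics.
contract Counter {
    uint256 public count;

    // Writes to storage, then reverts: the write is rolled back.
    function incrementThenFail() external {
        count += 1;
        revert("failed after writing");
    }

    // Writes to storage and succeeds: the write persists.
    function increment() external {
        count += 1;
    }
}

// Hypothetical caller that catches the callee's failure.
contract Caller {
    Counter public counter = new Counter();
    bool public lastCallFailed;

    function callAndCatch(bool shouldFail) external {
        if (shouldFail) {
            try counter.incrementThenFail() {
                lastCallFailed = false;
            } catch {
                // counter.count() is unchanged here, but this write is kept.
                lastCallFailed = true;
            }
        } else {
            try counter.increment() {
                // counter.count() has been incremented.
                lastCallFailed = false;
            } catch {
                lastCallFailed = true;
            }
        }
    }
}
```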

Solidity supports different kinds of catch blocks depending on the type of error:

- catch Error(string memory reason)
- catch (bytes memory lowLevelData)
- catch

If an error was caused by revert("reasonString") or require(false, "reasonString"), then the catch Error(string memory reason) clause will be executed:

function ask() external view returns (string memory) {
    try primary.speak() returns (string memory greeting) {
        return greeting;
    } catch Error(string memory reason) {
        // This is executed in case revert was called
        // inside speak() and a reason string was provided.
        return secondary.speak();
    } catch {
        return secondary.speak();
    }
}

The catch (bytes memory lowLevelData) clause is executed if the error signature does not match any other clause, if there was an error while decoding the error message, if there was a failing assertion in the external call (for example, a division by zero or a failing assert()), or if no error data was provided with the exception. In that case, you get access to the low-level error data:

function ask() external view returns (string memory) {
    try primary.speak() returns (string memory greeting) {
        return greeting;
    } catch Error(string memory reason) {
        // This is executed in case revert was called
        // inside speak() and a reason string was provided.
        return secondary.speak();
    } catch (bytes memory /*lowLevelData*/) {
        // This is executed in case revert() was used
        // or there was a failing assertion, division
        // by zero, etc. inside speak().
        return secondary.speak();
    }
}

If you don’t care about handling the error data, a catch { ... } as the only catch clause is enough:

function ask() external view returns (string memory) {
    try primary.speak() returns (string memory greeting) {
        return greeting;
    } catch {
        return secondary.speak();
    }
}

In order to catch all error cases, you need at least a catch { } clause or a catch (bytes memory lowLevelData) { } clause.
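The following sketch shows which clause handles which kind of failure. Failer, CatchClassifier, and the mode numbering are hypothetical, chosen only for this illustration: a require() with a reason string lands in catch Error, while a bare revert() with no data lands in catch (bytes memory) with empty data. On the 0.6.x compiler a failing assert() also reaches the bytes clause, but it consumes all the gas forwarded to the call; from Solidity 0.8.0, assert() failures and similar errors revert with Panic(uint256) instead, which can be matched with a separate catch Panic(uint errorCode) clause.

```solidity
pragma solidity >=0.6.12 <0.9.0;

// Hypothetical callee. mode 0: succeed, 1: require with a reason, 2: bare revert().
contract Failer {
    function run(uint8 mode) external pure returns (uint256) {
        require(mode != 1, "bad input"); // revert data encodes Error("bad input")
        if (mode == 2) revert();         // empty revert data
        return 42;
    }
}

// Hypothetical classifier reporting which clause handled the call.
contract CatchClassifier {
    Failer public failer = new Failer();

    function classify(uint8 mode)
        external
        view
        returns (string memory clause, string memory reason, uint256 dataLength)
    {
        try failer.run(mode) {
            clause = "success";
        } catch Error(string memory why) {
            // Reached for require(false, "...") or revert("...") in the callee.
            clause = "Error";
            reason = why;
        } catch (bytes memory lowLevelData) {
            // Reached when the revert data does not decode as Error(string),
            // e.g. a bare revert() with empty data.
            clause = "lowLevel";
            dataLength = lowLevelData.length;
        }
    }
}
```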

Note that try/catch only catches exceptions that happen inside the external call itself. Errors elsewhere in the calling function, including errors while evaluating the call’s arguments, are not caught. For example, a failing require() statement elsewhere in the ask() function will not be handled by the try/catch statement. The block following the call is the success block, and any exception raised inside it is not caught either.
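To see that errors in the success block escape the try/catch, consider this sketch. Greeter and StrictAsker are hypothetical contracts: the external call always succeeds, but a require() inside the success block can still fail, and when it does the whole ask() call reverts instead of falling back to the catch block.

```solidity
pragma solidity >=0.6.12 <0.9.0;

// Hypothetical callee that always succeeds.
contract Greeter {
    function speak() external pure returns (string memory) {
        return "hello";
    }
}

contract StrictAsker {
    Greeter public greeter = new Greeter();

    function ask(uint256 maxLength) external view returns (string memory) {
        try greeter.speak() returns (string memory greeting) {
            // A failure here happens in the success block: it is NOT routed
            // to the catch block, so the whole ask() call reverts.
            require(bytes(greeting).length <= maxLength, "greeting too long");
            return greeting;
        } catch {
            // Only reached if greeter.speak() itself reverts.
            return "fallback";
        }
    }
}
```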

Full Circuit Breaker Code

OK, so try/catch works for basic error handling. Can we do better by adding a circuit breaker?

Recall that we want to implement the Closed, Open, and Half-Open state machine described earlier in Solidity.

Here’s an outline of the CircuitBreaker library’s API. It isn’t a compilable Solidity interface, since interfaces can’t declare internal functions, but it summarizes the types, events, and functions the library defines:

// CircuitBreaker API outline (declarations only)
enum Status { CLOSED, OPEN }   // HALF-OPEN is derived: OPEN and the cooldown has passed
event Opened(uint retryAt);
event Closed();
struct Breaker {
    Status status;          // OPEN or CLOSED (HALF-OPEN is a subset of OPEN)
    uint8 failureCount;     // Counter for number of failed calls
    uint8 failureTreshold;  // When failureCount >= threshold, trip / open the breaker
    uint cooldown;          // How long after a trip before the breaker is half-opened (in seconds)
    uint retryAt;           // Unix timestamp when the breaker is half-opened (in seconds)
}
function build(uint8 _failureTreshold, uint _cooldown) internal pure returns (Breaker memory);
function success(Breaker storage self) internal;
function fail(Breaker storage self) internal;
function isClosed(Breaker storage self) internal view returns (bool);
function isOpen(Breaker storage self) internal view returns (bool);
function isHalfOpen(Breaker storage self) internal view returns (bool);

The Breaker struct stores all the state we need to monitor failures and manage the circuit breaker:

- status, failureCount, and failureTreshold let us check whether the breaker is OPEN or CLOSED.
- retryAt and cooldown let us check whether the breaker is HALF-OPEN.

And here’s the complete CircuitBreaker Solidity library code:

pragma solidity ^0.6.12;
/**
 * @dev Circuit breaker to monitor and handle external failures.
 *
 * These functions can be used to:
 * - Track failed and successful external calls
 * - Trip a circuit breaker when a failure threshold is reached
 * - Reset a circuit breaker when a cooldown timer is met
 */
library CircuitBreaker {
    enum Status { CLOSED, OPEN }
    event Opened(uint retryAt);
    event Closed();
    struct Breaker {
        Status status;          // OPEN or CLOSED
        uint8 failureCount;     // Counter for number of failed calls
        uint8 failureTreshold;  // When failure count >= threshold, trip / open the breaker
        uint cooldown;          // How long after a trip before the breaker is half-opened (in seconds)
        uint retryAt;           // Unix timestamp when breaker is half-opened (in seconds)
    }
    /**
     * @dev Returns a new Circuit Breaker.
     */
    function build(uint8 _failureTreshold, uint _cooldown) internal pure returns (Breaker memory) {
        require(_failureTreshold > 0, 'Breaker failure threshold must be greater than zero.');
        require(_cooldown > 0, 'Breaker cooldown must be greater than zero.');
        return Breaker(
            Status.CLOSED,
            0,
            _failureTreshold,
            _cooldown,
            0
        );
    }
    /**
     * @dev Tracks a successful function call.
     */
    function success(Breaker storage self) internal {
        if (_canReset(self)) {
            _reset(self);
        } else if (isClosed(self) && self.failureCount > 0) {
            // Only consecutive failures should count towards the threshold.
            self.failureCount = 0;
        }
    }
    /**
     * @dev Returns true if breaker can be reset.
     */
    function _canReset(Breaker storage self) private view returns (bool) {
        return isHalfOpen(self);
    }
    /**
     * @dev Resets the circuit breaker. HALFOPEN -> CLOSED
     */
    function _reset(Breaker storage self) private {
        self.status = Status.CLOSED;
        self.failureCount = 0;
        emit Closed();
    }
    /**
     * @dev Tracks a failed function call.
     */
    function fail(Breaker storage self) internal {
        self.failureCount++;
        if (_canTrip(self)) _trip(self);
    }
    /**
     * @dev Returns true if breaker can be tripped.
     */
    function _canTrip(Breaker storage self) private view returns (bool) {
        return (isClosed(self) && self.failureCount >= self.failureTreshold) || isHalfOpen(self);
    }
    /**
     * @dev Trips the circuit breaker. CLOSED / HALFOPEN -> OPEN
     */
    function _trip(Breaker storage self) private {
        self.status = Status.OPEN;
        self.retryAt = now + self.cooldown;
        emit Opened(self.retryAt);
    }
    function isClosed(Breaker storage self) internal view returns (bool) {
        return self.status == Status.CLOSED;
    }
    function isOpen(Breaker storage self) internal view returns (bool) {
        return self.status == Status.OPEN && now < self.retryAt; // Waiting for retry cooldown
    }
    function isHalfOpen(Breaker storage self) internal view returns (bool) {
        return self.status == Status.OPEN && now >= self.retryAt; // Has passed retry cooldown
    }
}

The two most important functions in CircuitBreaker are success() and fail(). They let you record successes and failures, while the rest of the code does the heavy lifting of determining the current state. The current state helps you decide when to retry a failed external call.

Don’t worry if you’re still unsure how the Circuit Breaker works at this point. Next, let’s see how you can use the CircuitBreaker library and its functions.

Using Circuit Breakers

First, import CircuitBreaker in your contract:

import "./CircuitBreaker.sol";
contract Resilient {
    using CircuitBreaker for CircuitBreaker.Breaker;
    ...
}

Then instantiate a circuit breaker:

contract Resilient {
    CircuitBreaker.Breaker public breaker;
    constructor(
        uint8 _treshold,
        uint256 _cooldown
    ) public {
        breaker = CircuitBreaker.build(_treshold, _cooldown);
    }
}

CircuitBreaker.build() takes two parameters:

- _treshold is the number of consecutive failures required for the circuit breaker to trip.
- _cooldown is the number of seconds before a tripped breaker allows another attempt.

Once instantiated, we can use it in our contract functions:

function safeAsk() external returns (string memory) {
    if (breaker.isOpen()) return secondary.speak();
    // When breaker is half opened or closed, try primary
    try primary.speak() returns (string memory greeting) {
        breaker.success(); // Notify breaker of success
        return greeting;
    } catch {
        breaker.fail(); // Notify breaker of failure
        return secondary.speak();
    }
}

In the above safeAsk() function:

- We call breaker.success() when the external call primary.speak() is successful.
- We call breaker.fail() when the external call fails and reverts. This increments the failureCount in our circuit breaker, and the breaker trips once it reaches the failureTreshold.
- We check breaker.isOpen() to find out whether we’ve made too many failed calls (failureCount >= failureTreshold).
- While the breaker is open, we route all calls to our secondary backup until enough cooldown time has passed before we try again.
- After the cooldown period has passed, the circuit is in a half-open state, so safeAsk() tries the external call primary.speak() again.

Full Fault Tolerant Smart Contract Code

pragma solidity ^0.6.12;
import "./CircuitBreaker.sol";
import "./interfaces/IHuman.sol";
contract Resilient {
    using CircuitBreaker for CircuitBreaker.Breaker;
    IHuman public primary;
    IHuman public secondary;
    CircuitBreaker.Breaker public breaker;
    constructor(
        IHuman _primary,
        IHuman _secondary,
        uint8 _treshold,
        uint256 _cooldown
    ) public {
        primary = _primary;
        secondary = _secondary;
        breaker = CircuitBreaker.build(_treshold, _cooldown);
    }
    function safeAsk() external returns (string memory) {
        if (breaker.isOpen()) return secondary.speak();
        // When breaker is half opened or closed, try primary
        try primary.speak() returns (string memory greeting) {
            breaker.success(); // Notify breaker of success
            return greeting;
        } catch {
            breaker.fail(); // Notify breaker of failure
            return secondary.speak();
        }
    }
}

Open Design Considerations

Here are some design ideas and open questions I encountered while building Circuit Breakers in Solidity. I would appreciate your thoughts and feedback on these.

Decorator Syntax

Initially, I wanted to wrap the whole ask() function in a decorator like so to massively reduce boilerplate:

function safeAsk() external faultTolerant(breaker, secondary) returns (string memory) {
    return primary.speak();
}

However, the faultTolerant() modifier would need to generate the try/catch block and the return value mapping:

// Hypothetical syntax: this does not compile
modifier faultTolerant(CircuitBreaker.Breaker breaker, address backup) {
    // QUESTION: What should backup's type be?
    // QUESTION: How do we know what backup functions to call?
    if (breaker.isOpen()) return backup.speak();
    // QUESTION: How can we tell the modifier to only wrap the external call?
    // QUESTION: How do we map return values?
    try _ {
        breaker.success();
        return greeting;
    } catch {
        breaker.fail();
        return backup.speak();
    }
}

I’m still not sure how this would work, or whether it can work at all. Right now, I don’t think it’s possible to have this syntactic sugar without low-level calls.
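For comparison, here is a rough sketch of the low-level route, under our own assumptions rather than anything in the circuit-breakers repo: GenericResilient, _withFallback(), and the Hello and Broken test doubles are hypothetical, and a plain failure counter stands in for the full breaker. The helper is generic over any calldata, but it gives up type checking: the caller has to ABI-encode the call and decode the raw return bytes, which is exactly the boilerplate a decorator would want to hide.

```solidity
pragma solidity >=0.6.12 <0.9.0;

interface IHuman {
    function speak() external view returns (string memory);
}

// Hypothetical test doubles.
contract Hello is IHuman {
    function speak() external view override returns (string memory) {
        return "hello";
    }
}

contract Broken is IHuman {
    function speak() external view override returns (string memory) {
        revert("broken");
    }
}

// Hypothetical sketch of a calldata-generic fallback built on low-level calls.
contract GenericResilient {
    IHuman public primary;
    IHuman public secondary;
    uint8 public failureCount;

    constructor(IHuman _primary, IHuman _secondary) public {
        primary = _primary;
        secondary = _secondary;
    }

    // Sends the same calldata to primary, then to secondary if primary fails.
    function _withFallback(bytes memory data) internal returns (bytes memory) {
        (bool ok, bytes memory result) = address(primary).staticcall(data);
        // A call to an address without code also "succeeds" with empty return
        // data, so an empty result is treated as a failure here.
        if (ok && result.length > 0) return result;
        if (failureCount < type(uint8).max) failureCount += 1;
        (ok, result) = address(secondary).staticcall(data);
        require(ok && result.length > 0, "backup failed");
        return result;
    }

    function safeAsk() external returns (string memory) {
        // Encoding and decoding are the caller's job with low-level calls.
        bytes memory raw = _withFallback(abi.encodeWithSignature("speak()"));
        return abi.decode(raw, (string));
    }
}
```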

Alternative Threshold Strategies

The current CircuitBreaker implementation uses a simple strategy to trip the breaker: a failure counter that resets on a successful call.

An alternative strategy might be to look at the frequency of errors, tripping once you get, say, a 50% failure rate. You could also have different thresholds for different errors, such as a threshold of 5 for one type of error but 2 for another.
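A failure-rate strategy could look something like the sketch below. RateBreaker and its parameters are hypothetical and not part of the CircuitBreaker library. It only shows the tripping rule (calls counted in fixed time windows, with a minimum sample size so that a single early failure cannot trip the breaker) and leaves out the cooldown and half-open logic, which could follow the library’s retryAt approach.

```solidity
pragma solidity >=0.6.12 <0.9.0;

// Hypothetical failure-rate breaker, not part of the circuit-breakers library.
contract RateBreaker {
    uint256 public immutable windowLength;       // window size in seconds
    uint256 public immutable minCalls;           // minimum sample size before tripping
    uint256 public immutable failureRatePercent; // e.g. 50 for a 50% failure rate

    uint256 public windowStart;
    uint256 public calls;
    uint256 public failures;
    bool public tripped;

    constructor(uint256 _windowLength, uint256 _minCalls, uint256 _failureRatePercent) public {
        require(_windowLength > 0 && _minCalls > 0, "invalid window");
        require(_failureRatePercent > 0 && _failureRatePercent <= 100, "invalid rate");
        windowLength = _windowLength;
        minCalls = _minCalls;
        failureRatePercent = _failureRatePercent;
        windowStart = block.timestamp;
    }

    // In a real contract this would be internal and called from the success
    // and catch blocks around the protected call, like success() and fail().
    function record(bool succeeded) external {
        // Start a fresh window once the current one has expired.
        if (block.timestamp >= windowStart + windowLength) {
            windowStart = block.timestamp;
            calls = 0;
            failures = 0;
        }
        calls += 1;
        if (!succeeded) failures += 1;
        // failures / calls >= rate / 100, compared without division.
        if (calls >= minCalls && failures * 100 >= calls * failureRatePercent) {
            tripped = true;
        }
    }
}
```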

Another strategy might be to use Flag contracts to send signals to the circuit breaker.

Breakers Introduce State Mutations to View Functions

Without any breaker logic, Resilient’s ask() function could be declared view. However, the circuit breaker logic in breaker.success() and breaker.fail() may modify state, which forces us to declare safeAsk(), and any other function that uses a circuit breaker, as non-view.

Reentrancy Vulnerability?

Since there are changes to state variables after calls to external functions, could circuit breakers be vulnerable to reentrancy?

try primary.speak() returns (string memory greeting) { // external call
    breaker.success(); // state change after external call
    return greeting;
} catch {
    breaker.fail(); // state change
    string memory greeting = secondary.speak(); // external call
    return greeting;
}

Is it possible to game the circuit breaker state? For example, perhaps we can implement a malicious speak() function that calls safeAsk() repeatedly, incrementing the breaker’s failure counter multiple times in a single transaction.

More security testing is needed here. In the meantime, I recommend wrapping a reentrancy guard around any function using CircuitBreaker to be safe.
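One observation may narrow the question for this particular example: IHuman.speak() is declared view, and since Solidity 0.5.0 calls to view functions are compiled to STATICCALL, so a malicious speak() cannot persist any state change; a re-entrant call into safeAsk() that tries to write to the breaker would revert inside that static context. The concern becomes real once the protected call is non-view. For that case, here is a minimal guard in the spirit of OpenZeppelin’s ReentrancyGuard and its nonReentrant modifier. SimpleReentrancyGuard, Guarded, ICallback, and Reenterer are hypothetical names for this sketch; protectedCall() mirrors the shape of safeAsk(), with a state write after a non-view external call.

```solidity
pragma solidity >=0.6.12 <0.9.0;

// Minimal reentrancy guard (hypothetical; OpenZeppelin ships a production one).
contract SimpleReentrancyGuard {
    uint256 private constant NOT_ENTERED = 1;
    uint256 private constant ENTERED = 2;
    uint256 private status = NOT_ENTERED;

    modifier nonReentrant() {
        require(status != ENTERED, "reentrant call");
        status = ENTERED;
        _;
        status = NOT_ENTERED;
    }
}

interface ICallback {
    function callback() external;
}

// Hypothetical protected contract: non-view external call, then a state write.
contract Guarded is SimpleReentrancyGuard {
    uint256 public failureCount;

    function protectedCall(ICallback target) external nonReentrant {
        try target.callback() {
            // success: nothing to record in this sketch
        } catch {
            failureCount += 1;
        }
    }
}

// Hypothetical attacker that tries to re-enter protectedCall().
contract Reenterer is ICallback {
    Guarded public guarded;

    constructor(Guarded _guarded) public {
        guarded = _guarded;
    }

    function callback() external override {
        // Reverts with "reentrant call"; the outer catch records one failure.
        guarded.protectedCall(this);
    }
}
```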

Potential Use Case: Conditional Emergency Powers

One interesting use case for circuit breakers is to limit the need for all-encompassing admin powers. Today, some DeFi protocols reserve special admin privileges for their development team in case things go awry due to a bug or hack. This lets them move fast should things go wrong, but should these powers be available all the time?

Circuit breakers enable you to grant emergency powers temporarily, for upgrade purposes. Instead of giving full admin permissions by default, we can grant emergency powers only when the situation arises: when a breaker trips. Like martial law, emergency powers should only be granted in an emergency!
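A minimal sketch of the idea, under stated assumptions: EmergencyPowers, replacePrimary(), onlyAdminInEmergency, and the Broken test double are hypothetical, and a simplified failure counter stands in for the CircuitBreaker library. Failures are only recorded when the real external call reverts; the admin can swap out the primary dependency only while the breaker is tripped, and doing so closes the breaker again, revoking the power.

```solidity
pragma solidity >=0.6.12 <0.9.0;

interface IHuman {
    function speak() external view returns (string memory);
}

// Hypothetical dependency that always fails.
contract Broken is IHuman {
    function speak() external view override returns (string memory) {
        revert("broken");
    }
}

// Hypothetical sketch: admin powers that only exist while the breaker is tripped.
contract EmergencyPowers {
    address public immutable admin;
    uint8 public immutable failureThreshold;
    IHuman public primary;
    uint8 public failureCount;

    event PrimaryReplaced(address oldPrimary, address newPrimary);

    constructor(IHuman _primary, uint8 _failureThreshold) public {
        require(_failureThreshold > 0, "threshold must be > 0");
        admin = msg.sender;
        primary = _primary;
        failureThreshold = _failureThreshold;
    }

    function isTripped() public view returns (bool) {
        return failureCount >= failureThreshold;
    }

    // Failures are only recorded when the real external call reverts.
    function ask() external returns (string memory) {
        try primary.speak() returns (string memory greeting) {
            return greeting;
        } catch {
            if (failureCount < failureThreshold) failureCount += 1;
            return "";
        }
    }

    modifier onlyAdminInEmergency() {
        require(msg.sender == admin, "not admin");
        require(isTripped(), "breaker not tripped");
        _;
    }

    // Emergency power: usable only while the breaker is tripped.
    function replacePrimary(IHuman newPrimary) external onlyAdminInEmergency {
        emit PrimaryReplaced(address(primary), address(newPrimary));
        primary = newPrimary;
        failureCount = 0; // closing the breaker revokes the emergency power
    }
}
```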

In Closing

The circuit-breakers Solidity code is open source and available on GitHub. Star the repo if you found it useful.

In an ultra-interconnected system, fault tolerance is key. A fault-tolerant design enables a system to continue its intended operation, possibly at a reduced level, rather than failing completely, when some part of the system fails.

As the DeFi space continues to grow rapidly, we should strive to build fault-tolerant smart contract systems. The Circuit Breaker pattern prevents an application from repeatedly performing an operation that is likely to fail.

In this article, we learned about:

- The dangers of cascading failure in service oriented architectures,

- Fault tolerance and resiliency patterns,

- How circuit breakers can improve fault tolerance in smart contracts, and

- An implementation of a Circuit Breaker in Solidity.

Congratulations on reading this far! This project is still a work in progress. It all started with me trying to figure out how and when to use Solidity’s new try-catch construct. I’m still not 100% sure I’m using it correctly. Please feel free to share your thoughts and feedback on Github or in the comments section below. Cheers.
